Many of us have been pretty disappointed by the long lead time it takes to get chips from specification into production. For RISC-V devotees, this came into clearest focus this year. November of 2021 brought us the ratified Vector 1.0 specification, but we’ve mostly developed on cores emulated in software or FPGAs, or on chips like Allwinner’s D1 family, which paired a single core with a pre-release version of the Vector spec that was already over a year old when the device shipped. Lucky for us, history may repeat only the one-year part of that: the first Vector 1.0 silicon is expected late this calendar year, likely November or December.

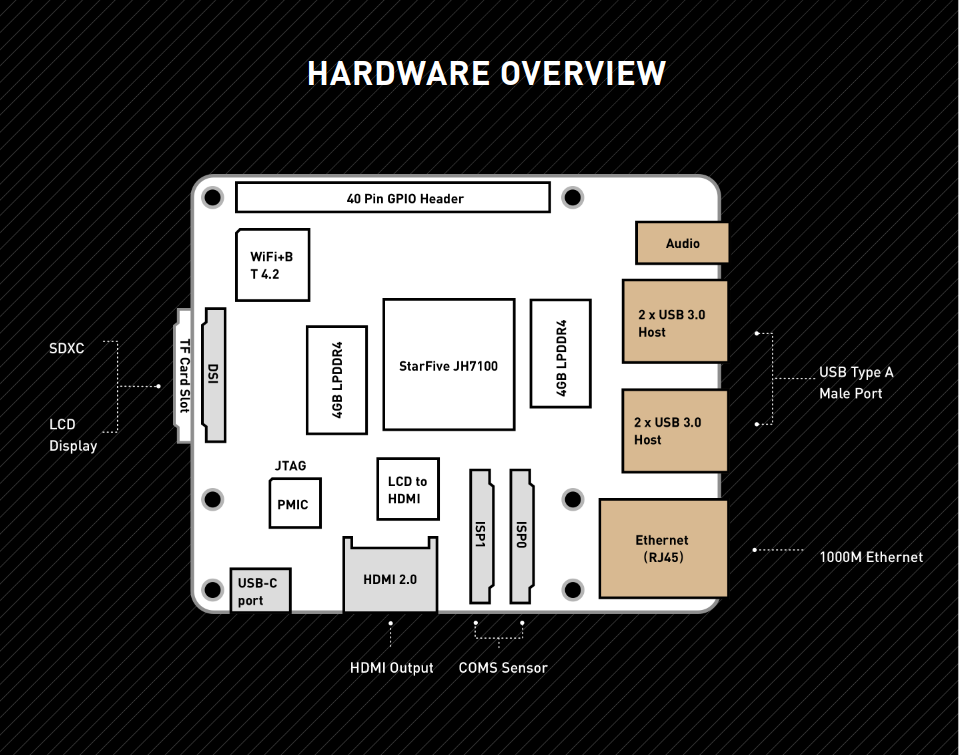

Many of us had pinned our hopes on StarFive, with their close ties to IP vendor SiFive and their collective “dry-run” experience shipping many hundreds of chips through BeagleV, Starlight, and VisionFive. The upcoming JH-7110 iteration DOES bring 3D graphics and four 1.5 GHz cores, along with comfortable RAM headroom (2-8 GB). StarFive’s Kickstarter was successful, with over 2,000 units, and the JH-7110 is easily one of the most anxiously awaited parts of 2022, with Pine64’s fast-following board adding a PCIe slot for graphics or other expansion.

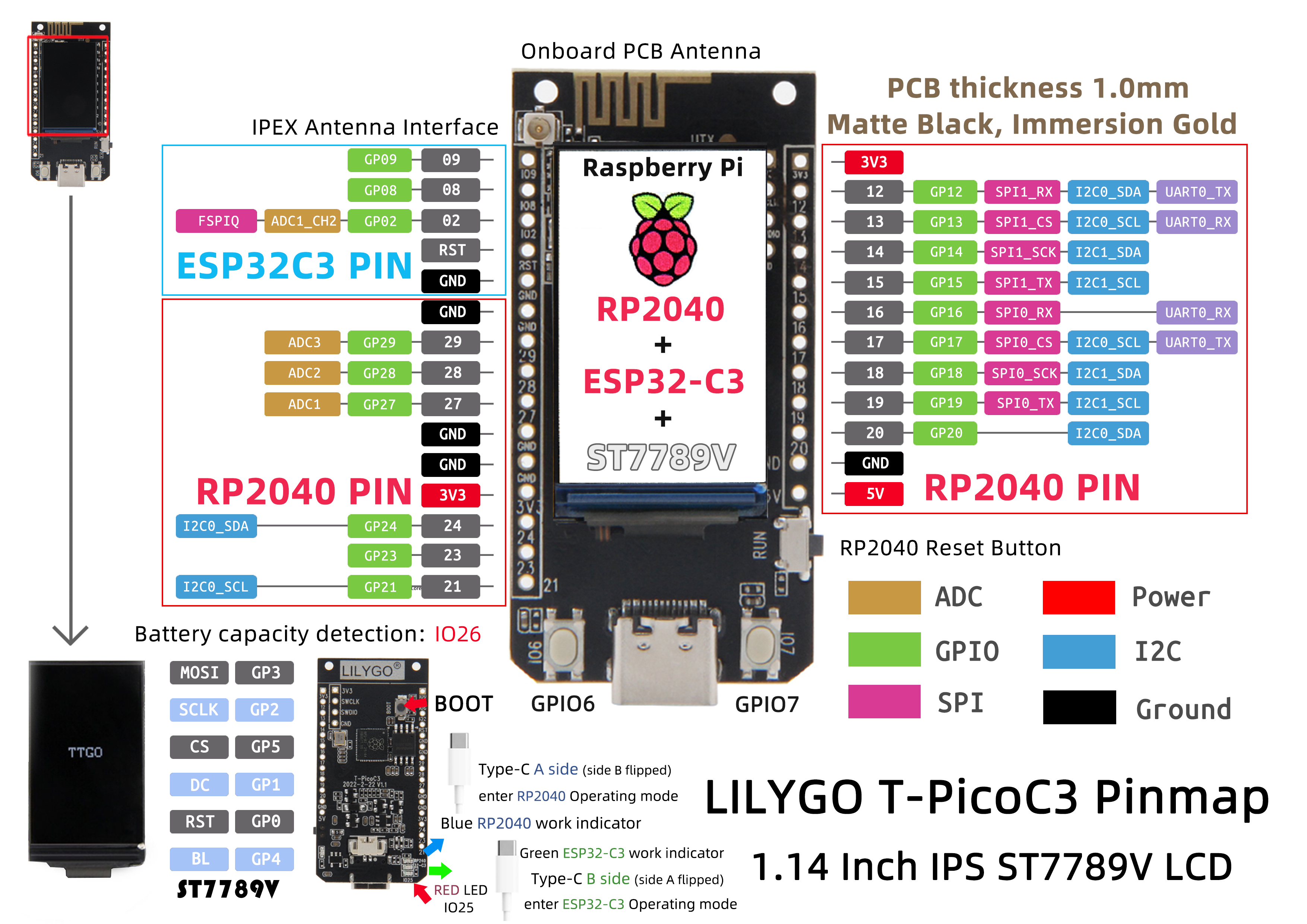

The new part has generated less buzz because, while it has been known for a few months, it was under press embargo until now. It comes from Shenzhen’s Bouffalo Lab, which is relatively unknown outside of RISC-V developer circles. They’re very much a Chinese company and their Western presence can be hard to find, but they have a family of developer tools with (mostly) enough English documentation and support. While they have really inexpensive I/O chips, their chips will mostly be known to readers of this page as the brains of Pine64’s PineCone and the reduced-pin-count Pinenut. In broad strokes, those BL602 and BL604 chips are comparable to the ESP32-C3, with a SiFive E24 core and a basket of I/O, including Bluetooth and WiFi. Cousins BL702 and BL706 add more GPIO, may trade WiFi for Zigbee in certain models, and hit cost/performance points that make it possible to emulate an FTDI in software, suitable for a $3.59 JTAG board, or to drive full-size panel displays while feeding WiFi services, GPIO monitoring, and such. They’re very flexible parts.

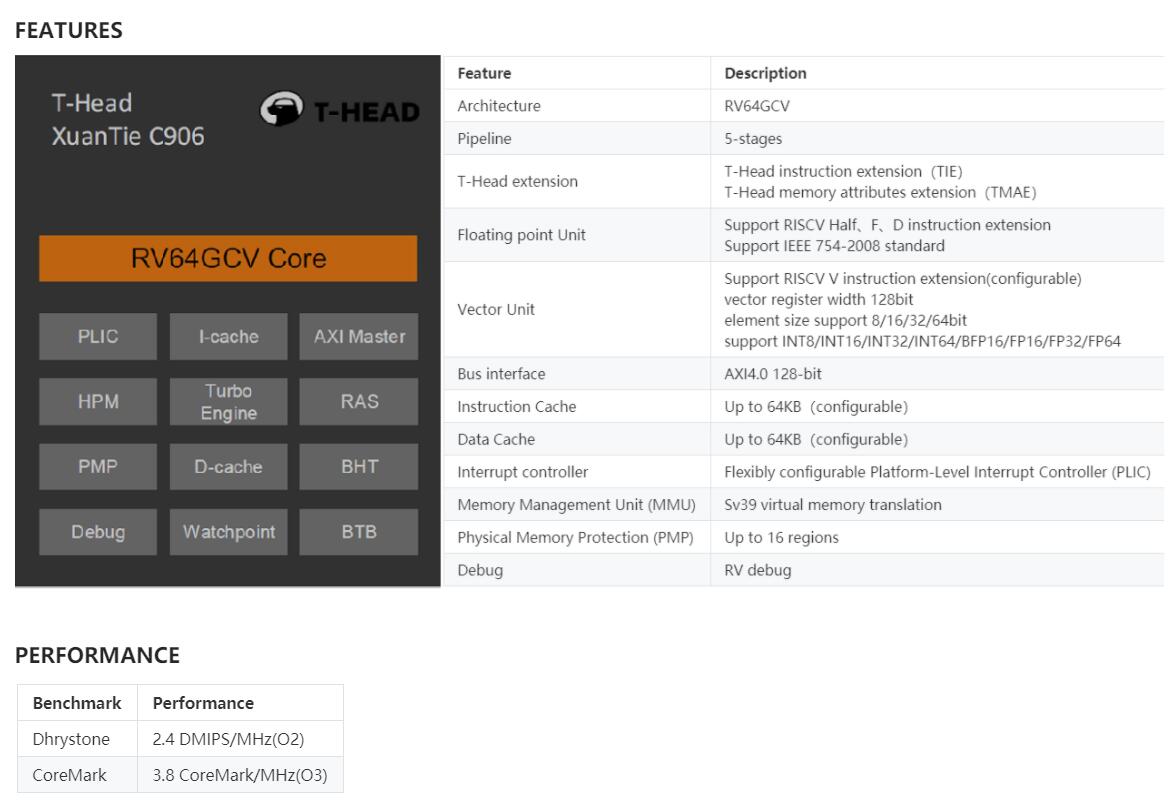

The zinger here is that for the BL808, their newest chip (expected “soon”), we leave behind the SiFive cores and go with the cores that were open sourced by Alibaba’s chip division, T-Head, about a year ago. Bouffalo was able to pair T-Head’s experience in high-speed cores with their own experience in fabbing high-volume parts, and fuse in value like the new Vector 1.0 specification. Now that we have ~18 months or more of experience in simulating and building software for those parts via LLVM and, less so, GCC, that seems like a great partnership.

The coarse-level datasheet is almost self-deprecating. It has a “take four marginally related compute nodes and attach everything to everything” look.

Bouffalo did what they do best, and Sipeed is on deck to do for this chip what they did for the (then) ground-breaking GD32VF103 (zillions of <$10 RISC-V boards without cables and a very usable SDK) or the K210, which they morphed into a dozen form factors. The K210 married an early Rocket design with a numeric computation unit and made FL acceleration/AI accessible to the <$20 developer in many packages. So what makes BL808 a good date to bring to the computing ball of 202x?

Integration. The likes of Sipeed, Pine64, and others will mount the part on a variety of backing form factors, so people wanting access to these chips can just use them without having to wire-wrap them or hire a high-speed digital logic team to handle all the high-speed timing craziness.

Tool stability. RISC-V is probably the first real ocean of silicon tech where the software team was delivering before the hardware team could make wafers. RISC-V is simulated, the tools are validated, and these tools are all available at whatever risk/scale/price point you want to pick.


There are already hundreds of pages of documentation available online. It’s probably not the best place, but it’s the first place I’ve seen that’s publicized in a way that doesn’t look like a leak. :-)

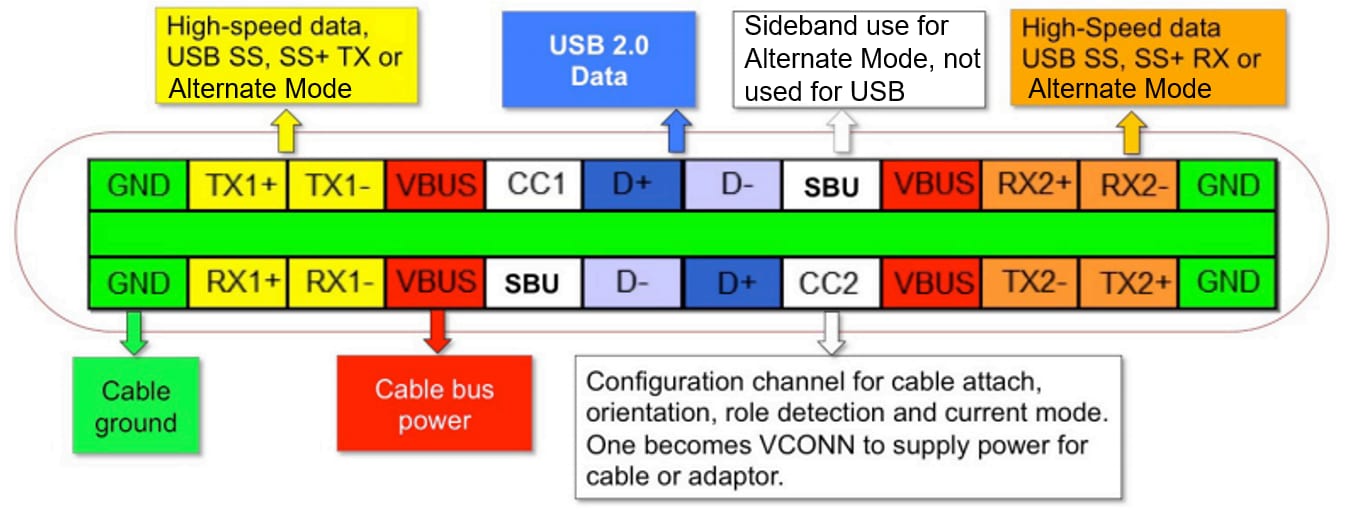

Of course, the chips themselves have real-time counters, 20-channel Direct Memory Access controllers (as we do), USB2, JTAG, SPI, four UARTs, and all those other creature comforts that we essentially expect to see in our $10 chips these days. (Pricing hasn’t been announced…) This part has so many processing/IO cores that it’s actually hard to distinguish them.

“The wireless subsystem includes a RISC-V 32-bit high-performance CPU, integrated Wi-Fi/BT/Zigbee wireless…”

“The multimedia subsystem includes a RISC-V 64-bit ultra-high-performance CPU and integrates video processing modules such as DVP/CSI/H264/NPU, which can be widely used in various AI fields such as video surveillance/smart speakers…”

“NPU (numeric processing unit) HW NN (hardware neural networking) co-processor (BLAI-100, Bouffalo Logic Artificial Intelligence) generally used for AI applications”

Of course, there’s also a low-power 32-bit RISC-V unit to babysit THOSE four compute modules, because it’s 2022 and why the hell not!!!

You literally end up with M0 having “32-bit RISC-V CPU with a 5-stage pipeline structure, supports RISC-V 32/16-bit mixed instruction set, contains 64 external interrupt sources, and 4 bits can be used to configure interrupt priority.”

D0 has “a 64-bit RISC-V CPU with a 5-stage pipeline structure, supports the RISC-V RV64IMAFCV instruction architecture, contains 67 external interrupt sources, and 3 bits can be used to configure the interrupt priority.”
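To put those priority fields in perspective, here is a minimal sketch in C of what the bit counts imply: 4 bits on M0 allow 16 distinct priority values, and 3 bits on D0 allow 8. The helper names `priority_levels` and `clamp_priority` are made up for illustration and are not from Bouffalo’s SDK. How the hardware actually encodes or orders those values isn’t covered by the quotes above, so treat this as arithmetic only.

```c
#include <stdint.h>

/* Number of distinct values an N-bit priority field can hold.
 * Assumes bits < 32. Hypothetical helper, not an SDK function. */
uint32_t priority_levels(unsigned bits)
{
    return UINT32_C(1) << bits;
}

/* Clamp a requested priority so it fits in an N-bit field.
 * Whether a larger number means "more urgent" is an assumption
 * left to the real interrupt controller documentation. */
uint32_t clamp_priority(uint32_t requested, unsigned bits)
{
    uint32_t max = priority_levels(bits) - 1u;
    return requested > max ? max : requested;
}
```

So `priority_levels(4)` is 16 for M0 and `priority_levels(3)` is 8 for D0. Code shared between the two cores would need something like `clamp_priority` so that a priority of 12, valid on M0, doesn’t overflow D0’s 3-bit field.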

As a software engineer, your job as a shepherd is to keep all the computing power your customers have been asked to pay for busy, but not overloaded. Don’t awaken a 64-bit core with an FPU if your immediate need (maybe it’s a temperature sensor recognizing that something is hell-bound) can be handled by a mostly 16-bit, integer-only RISC-V part. Of course, lighting up the numeric inference cores brings on a very different set of power and performance tradeoffs.
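One way to picture that shepherding is a tiny “cheapest core that can do the job” lookup. This is only a sketch in C under loud assumptions: the core table, the `CAP_*` flags, and `pick_core` are all hypothetical names, the low-power core is assumed to be integer-only, and D0’s float and vector flags come from the RV64IMAFCV string quoted above. A real scheduler would also have to weigh wake-up latency and power states, none of which are modeled here.

```c
#include <stddef.h>
#include <stdint.h>

/* Capabilities a task might require (hypothetical flag names). */
enum {
    CAP_FLOAT  = 1u << 0,  /* hardware floating point  */
    CAP_VECTOR = 1u << 1   /* RISC-V Vector extension  */
};

typedef struct {
    const char *name;  /* label only, for logging            */
    uint32_t    caps;  /* bitmask of CAP_* the core provides */
} core_t;

/* Ordered cheapest-to-wake first. The ordering and the LP entry's
 * capabilities are assumptions for illustration, not datasheet facts. */
static const core_t cores[] = {
    { "LP", 0u },                      /* assumed integer-only            */
    { "D0", CAP_FLOAT | CAP_VECTOR },  /* F and V per the quoted ISA name */
};

/* Return the first (cheapest) core that provides every needed capability,
 * or NULL if no core in the table qualifies. */
const core_t *pick_core(uint32_t needed)
{
    for (size_t i = 0; i < sizeof cores / sizeof cores[0]; i++) {
        if ((cores[i].caps & needed) == needed) {
            return &cores[i];
        }
    }
    return NULL;
}
```

An integer-only temperature check calls `pick_core(0)` and stays on the low-power core, while anything asking for `CAP_VECTOR` wakes D0. Keeping the table ordered by cost means adding another core later is a one-line change.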

Of course, the chip has the mandatory boatload of timers, PWMs, Ethernet (10/100 Mbps only), and more. It really is quite ridiculous what a couple of dollars and 88 pins will buy in modern times. It’s an added bonus that these parts are expected to be available with less than a 104-week lead time. 🙂

These look like very cool chips and I look forward to seeing boards from the likes of Sipeed, and maybe Pine64 or BeagleV, very soon. I haven’t seen formal pricing yet, but I expect to see full boards for less than comparable D1 boards, with the added benefit of standards compliance (ahem, those page table bits and jumping the gun on V without pushing it into the reserved opcode space…) over the Allwinner parts. These should be priced way under the JH-7110’s, but have the edge of NPUs, particularly when paired with Sipeed’s new Maix library that makes NPU/Tensor-style programming pretty easy.

Programmers, what tools do you need to see to tame these boards?

Hardware types, what playgrounds can you build for the programmers to fill?


